Before discussing the top big data challenges, it makes sense to first understand what big data is. Sorry to break it to you, but there’s no one-size-fits-all definition of big data. Ironic, I know. But you can’t identify big data problems without first knowing what big data means to you.

What one business describes as big data may look quite different to another organization, or to the same organization in a year or two.

At the same time, what a small company may describe as big data may not be regarded as the same by large multinationals. For this reason, it would not make sense to define big data using gigabytes, terabytes, or even petabytes.

Image Source: Quora

So, What Is Big Data?

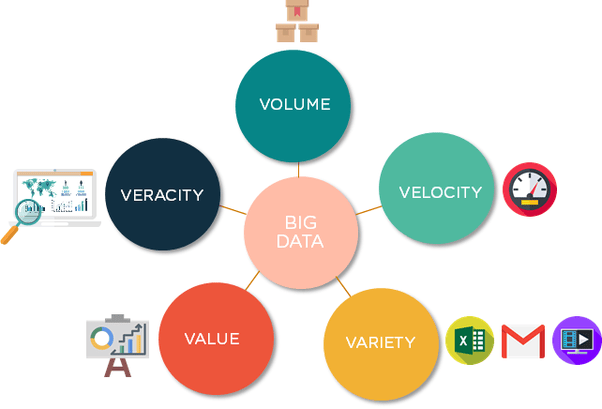

The majority of experts define big data using three ‘V’ terms. Your organization has big data if your data stores have the characteristics below.

- Volume – your data is so large that your company faces processing, monitoring, and storage challenges. With trends such as mobility, the Internet of Things (IoT), social media, and eCommerce in place, much information is being generated. As a result, almost every organization satisfies this criterion.

- Velocity – does your firm generate new data at high speed, and are you required to respond in real time? If yes, then your organization has the velocity associated with big data. Most companies involved with technologies such as social media, the Internet of Things, and eCommerce meet this criterion.

- Variety – your data has the variety associated with big data if it exists in many different formats. Typically, big data stores include word-processing documents, email messages, presentations, images, videos, and data kept in structured RDBMSes (Relational Database Management Systems).

There are other ‘V’ terms, but we shall focus on these three for now.

The fact that big data has dramatically transformed the growth and success of businesses is irrefutable. Even better, its positive impact is being felt in every field across the globe.

Today, companies can collect and analyze vast amounts of data, unlocking unparalleled potential. Organizations are achieving what they previously thought unachievable, from forecasting market trends to optimizing security and reaching new demographics.

However, big data analytics doesn’t come without its challenges. But thankfully, experts are doing the impossible to discover solutions for these problems.

Image Source: Quora

Due to aspects such as security, privacy, compliance issues, and ethical use, data oversight can be a challenging affair. However, the management problems of big data become more extensive due to the unpredictable and unstructured nature of the data.

This post highlights the top nine significant big data challenges and how to solve them.

They are:

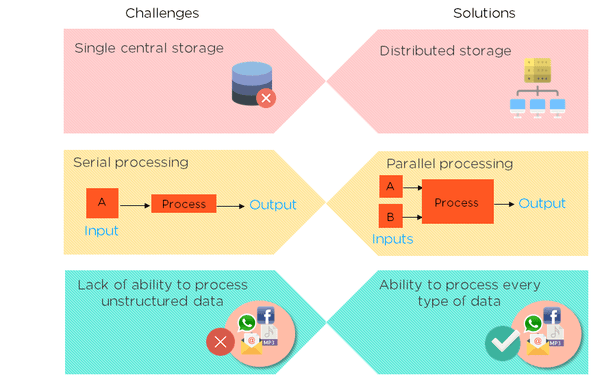

1. Managing Voluminous Data

The speed at which big data is being created is quickly surpassing the rate at which computing and storage systems are being developed. A report by IDC projected that the amount of data available by the end of 2020 would be enough to fill a stack of tablets measuring 6.6 times the distance between the Earth and the moon.

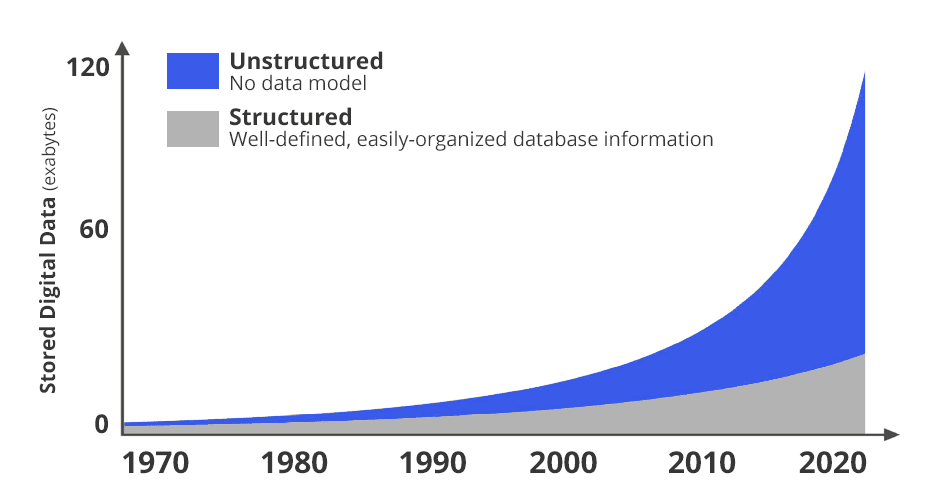

As mentioned earlier, handling unstructured data is becoming quite problematic: reported figures rose from 31 percent in 2015 to 45 percent in 2016 and 90 percent in 2019. Analysts argue that the amount of unstructured data generated grows by 55 percent to 65 percent yearly.

What Is Unstructured Data?

Unstructured data is data that cannot be easily stored in a traditional row-and-column database or in spreadsheets such as Microsoft Excel tables. For this reason, it is tough to analyze and difficult to search. These challenges explain why, until recently, organizations didn’t consider unstructured data to be of any use.

Image Source: M-Files

The growth of complex data formats such as documents, video, audio, social media, and data from new smart devices compounds the problems related to unstructured data.

The IDC report also projected that online business transactions will grow to approximately 450 billion daily. Separately, a Cisco research report projected that in five years, the number of connected devices will reach 50 billion.

These predictions indicate the generation of massive data, and that businesses should prepare accordingly.

Solution: Unstructured Data Analytics Tools

Businesses can make use of unstructured data analytics tools created specifically to help big data users extract insights from unstructured data. These tools are powered by AI (artificial intelligence).

Through AI algorithms, businesses can generate meaningful information from large volumes of unstructured data created daily.

Organizations that have discovered this secret use tools and software in mining, processing, integrating, storing, tracking, indexing, and reporting business insights from unfiltered unstructured data. Without these tools, organizations cannot handle unstructured data efficiently.

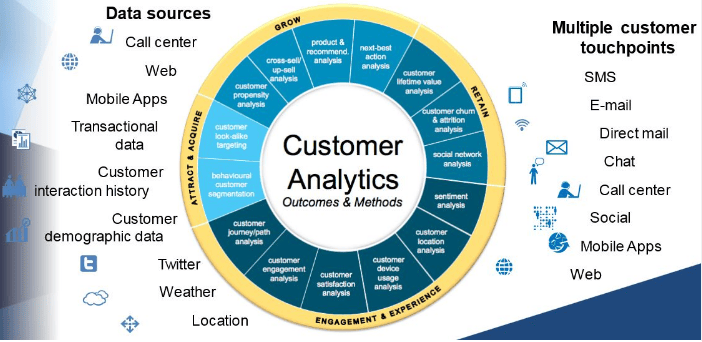

Customer analytics is an excellent example of the usage of unstructured data.

Image source: iHub

Your ability to integrate unstructured data gathered from many sources like online product reviews, social media mentions, and call center transcripts, combined with the deployment of AI to identify information patterns in these sources, gives you the intelligence needed to make game-changing decisions capable of improving customer relationships and sales.

Companies that have been using unstructured data know that it is a treasure trove when it comes to marketing intelligence.

Unstructured data tools help users speedily scan through robust datasets, identify customer behavior, and discover the most compelling products or services for their target audience.

Product development and aspects such as determining appropriate marketing initiatives derive immense benefits from these revelations.

Compliance issues can be expensive in terms of money, time, and reputation for heavily regulated organizations.

Insights offered by unstructured data during the analysis of chatbot conversations and emails can help firms unmask regulatory problems before the issue gets out of hand and eats into the company’s reputation.

Sentiment analysis, natural language processing, speech-to-text conversions, and pattern recognition make this achievable via machine learning and AI algorithms.
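To make the pattern recognition idea concrete, here is a minimal sketch of scanning messages for risky phrases. It is a simple rule-based stand-in, not a machine learning model, and the watch list `RISK_PATTERNS`, the function `flag_messages`, and the sample messages are all hypothetical names and data invented for illustration.

```python
import re

# Hypothetical watch list; in practice compliance staff would define these.
RISK_PATTERNS = {
    "guarantee": re.compile(r"\bguaranteed?\s+returns?\b", re.IGNORECASE),
    "off_record": re.compile(r"\boff\s+the\s+record\b", re.IGNORECASE),
    "delete_request": re.compile(
        r"\bdelete\s+(this|the)\s+(email|message)\b", re.IGNORECASE
    ),
}


def flag_messages(messages):
    """Return (message index, pattern label) pairs for every risky phrase found."""
    hits = []
    for index, text in enumerate(messages):
        for label, pattern in RISK_PATTERNS.items():
            if pattern.search(text):
                hits.append((index, label))
    return hits


# Made-up sample messages, standing in for emails or chatbot transcripts.
messages = [
    "Thanks for the update, see you Monday.",
    "We can promise guaranteed returns on this product.",
    "Keep this off the record and delete this email afterwards.",
]
hits = flag_messages(messages)
```

A production tool would likely replace the fixed phrase list with trained language models, but the flow stays the same: scan the text, label what looks risky, and route it to a human reviewer.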

Companies should consider replacing data silos with scalable data hubs to reap optimal benefits from unstructured data.

By investing in systems capable of storing, analyzing, and reporting data from a wide array of sources and sharing it with the decision-making unit, organizations can lay bare the tremendous business value associated with unstructured data.

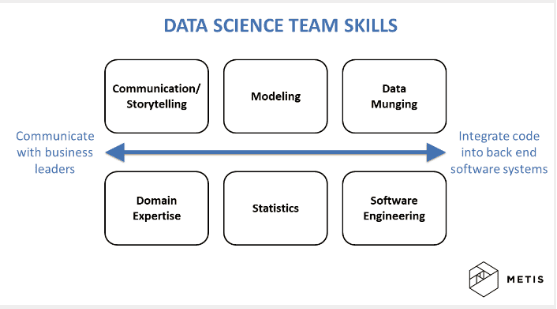

2. Data Scientists Shortage

Business leaders and data scientists are rarely on the same page.

Analysts who are just beginning their careers often lose sight of the real value of business data and, consequently, end up giving insights that fail to solve the issue at hand.

Then there is the problem of the limited number of data scientists capable of delivering value.

Image source: Inside Big Data

While surveys show that all professionals in the big data field are compensated exceptionally well, companies still have to deal with the difficulties of retaining top talent. Plus, training entry-level technicians is extremely expensive.

Solution: When There’s no Talent Available, Use Machines

To curb this situation, the majority of organizations are turning to self-service analysis solutions that utilize machine learning, AI, and automation to extract meaning from data by involving minimal manual coding. You can avoid this data scientist shortage by implementing data annotation in your business.

Those who haven’t resorted to this solution emphasize the importance of looking for talent where it is already present.

Instead of compromising and settling for under-skilled workers, be on the lookout for firms with a positive reputation, and as cruel as it sounds, poach talented workers who can be of assistance.

Otherwise, the adoption of automation, AI, and machine learning remains the most effective and inexpensive solution to the shortage of data scientists.

Or you can let platform integration do the heavy lifting for you. Create an account with SyncApps and integrate your CRM or ERP with your email automation platform. It costs 1% or less of what you’d pay a data analyst — and it creates better-looking, more accurate reports.

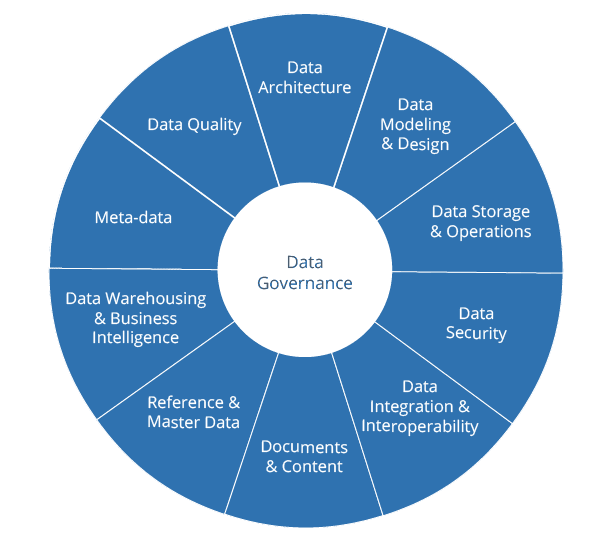

3. Data Governance and Security

Image source: Bi-Survey.com

Big data entails handling data from many sources. The majority of these sources use unique data collection methods and distinct formats.

As such, it is not unusual to experience inconsistencies even in data with similar value variables, and making adjustments is quite challenging.

For example, in the world of retail, the annual turnover value can differ depending on whether it comes from the online sales tracker, the local POS (point of sale), the company’s ERP, or the company accounts.

When dealing with such a situation, it is imperative to adjust the difference to ensure an appropriate answer. The process of achieving that is referred to as data governance.
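As a rough illustration of spotting such differences, the sketch below compares one figure reported by several systems and flags any source that strays too far from the median. The source names, the turnover values, the `reconcile` function, and the 1 percent tolerance are all assumptions made up for this example, not part of any particular governance product.

```python
from statistics import median


def reconcile(values_by_source, tolerance=0.01):
    """Flag sources whose figure deviates from the median by more than
    `tolerance`, expressed as a fraction of the median."""
    reference = median(values_by_source.values())
    flagged = {
        source: value
        for source, value in values_by_source.items()
        if abs(value - reference) > tolerance * abs(reference)
    }
    return reference, flagged


# Hypothetical annual turnover as reported by four different systems.
turnover = {
    "online_sales_tracker": 1_250_000.0,
    "store_system": 1_248_500.0,
    "erp": 1_251_000.0,
    "company_accounts": 1_180_000.0,
}
reference, flagged = reconcile(turnover)
```

With these made-up numbers, only `company_accounts` is flagged. Deciding which source is actually right still takes a human or an agreed business rule; the code only shows where to look.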

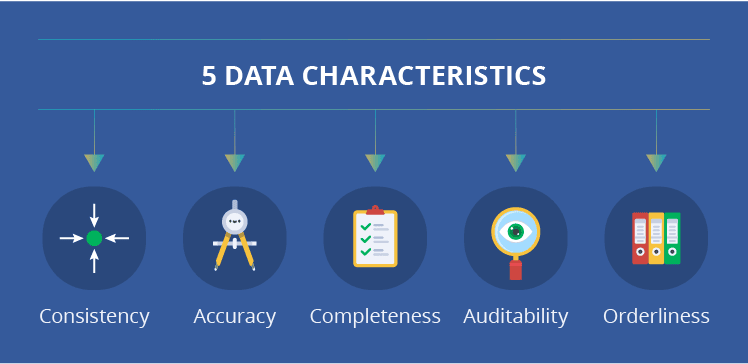

We cannot hide the fact that the accuracy of big data is questionable. It is never 100 percent accurate. While that’s not a critical issue, it doesn’t give companies the right to neglect the reliability of their data.

And this is for good reason. Data may contain not only wrong information but also duplicates and contradictions. You already know that data of inferior quality can hardly offer useful insights or help identify precise opportunities for handling your business tasks.

So, how do you increase data quality?

The Solution:

The market is not short of data cleansing techniques. First things first, though: a company’s big data must have a proper model, and it’s only after you have it in place that you can proceed to do other things, such as:

- Making data comparisons based on a single point of truth, such as comparing variants of contacts to their spellings within the postal system database.

- Matching and merging records of the same entity.

Another thing that businesses must do is to define rules for data preparation and cleaning. Automation tools can also come in handy, especially when handling data prep tasks.
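The matching and merging step can be sketched in a few lines. This is a minimal example, assuming that every record is a dictionary of string fields and that a trimmed, lowercased email address is a good enough matching key; the `merge_records` function and the sample contacts are hypothetical.

```python
def merge_records(records):
    """Merge records that share the same normalized email address,
    keeping the first non-empty value seen for each field."""
    merged = {}
    for record in records:
        # Cleaning rule (assumed): trim whitespace and lowercase the email.
        key = record["email"].strip().lower()
        target = merged.setdefault(key, {"email": key})
        for field, value in record.items():
            if field == "email":
                continue
            value = value.strip()
            if value and not target.get(field):
                target[field] = value
    return list(merged.values())


# Made-up contact records; the first two describe the same person.
contacts = [
    {"email": "Ana.Silva@example.com ", "name": "Ana Silva", "phone": ""},
    {"email": "ana.silva@example.com", "name": "", "phone": "555-0100"},
    {"email": "bo@example.com", "name": "Bo Chen", "phone": "555-0199"},
]
clean = merge_records(contacts)
```

Real data usually needs fuzzier matching, for instance on names and addresses, but writing even a simple rule down like this is what makes the cleaning step repeatable and easy to automate.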

Furthermore, determine the data that your company doesn’t need and then place data purging automation before your data collection processes to get rid of it way before it tries to enter your network. Also, secure data with confidential computing, which safeguards sensitive information within your network.

While doing all this, always remember that you can never achieve 100 percent accuracy with big data. You have to be aware of this fact and then learn how to deal with it.

Image Source: Science Soft

4. Data Security and Integrity

Data security and integrity are yet another problem of big data. The presence of a huge number of channels and interconnecting nodes increases the likelihood of hackers taking advantage of any system vulnerability.

At the same time, the criticality of data means that a minor mistake can cause huge losses, which is all the more reason for organizations to pursue the best security practices in their data handling systems.

The Solution:

The solution lies in prioritizing security over anything else when it comes to handling big data. Pay more attention to data security and integrity during the data system design stage. Considering the sensitive nature of data, incorporating VPN technology is another crucial step. It can help encrypt data transmission, enhance privacy, and safeguard sensitive information as it travels between different points, providing an additional layer of security for your data-centric operations. Do not wait until the later stages of development for you to integrate data security aspects.

When choosing a browser for your data-sensitive tasks, you might wonder whether Brave is better than Chrome or other web browsers. Assessing their features can guide you in selecting the browser that best fits your security preferences. Also, aim to implement tools designed to streamline key security processes and automate as much as possible to minimize the need for manual intervention. This should include attack surface management tools that shore up your entire infrastructure.

If you fail to take care of it from the very beginning, issues of big data security will bite when it’s the last thing on your mind. Also, as big data technologies evolve – as they always do – do not neglect their security aspects.

Businesses should update data security concerns in their systems each time a system evolves. Failure to do that will put you into unimaginable data security problems, which can put the reputation of your company at stake.

Organizations are actively deploying advanced solutions that leverage machine learning to curb cybercrime. Given the strong demand, the world can expect many innovations geared toward data security and integrity.

5. Too Many Options!

Just like any type of growing technology, the market for big data analytics has so many options that users find it confusing to settle for one or a few that they need.

With too many choices, it can be challenging to identify the exact big data technologies that are in line with your goals. This confusion happens even to individuals and businesses that are relatively well-versed in data matters.

The options overload goes beyond the right programs to deploy. Data scientists use many strategies and methods to collect, safeguard, and analyze data. Hence, there isn’t a one-size-fits-all approach.

The Solution:

Businesses should exercise caution when dealing with this issue. You shouldn’t try to sift aimlessly through real-time data with whatever programs pop up in your Google search. You need the best strategy you can get in order to make the most of your data.

If your company lacks data handling professionals, such as data scientists or technicians, consider hiring a professional data firm to help you identify the most appropriate strategy for your firm’s unique goals.

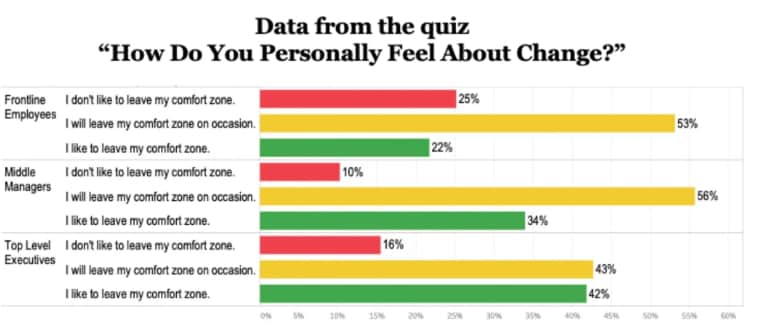

6. Organizational Resistance

Organizational resistance – even in other areas of business – has been around forever. Nothing new here! It is a problem that companies can anticipate and as such, decide the best way to deal with the problem.

If it’s already happening in your organization, you should know that it is not unusual. Of the utmost importance is to determine the best way to handle the situation to ensure big data success.

Image source: Leadership IQ

The Solution:

Companies must understand that developing a database architecture goes beyond bringing data scientists on board. This is the easiest part because you can decide to outsource the analysis part.

Perhaps the biggest challenge entails pivoting the architecture, structure, as well as culture of the company to execute data-based decision-making.

Some of the biggest problems that business leaders have to deal with today include insufficient organizational alignment, a lack of adoption and understanding among middle management, and business resistance.

Large enterprises that have already built and scaled operations based on traditional mechanisms find it challenging to make these changes.

Experts advise organizations to recruit strong leaders who understand data, as well as people who proactively challenge the status quo of existing practices and recommend relevant changes. One way to establish this type of leadership is by appointing a chief data officer.

A survey by NewVantage Partners revealed that about 55.9 percent of Fortune 100 companies have brought chief data officers on board to satisfy this requirement. Is it a trend that other companies can follow? That remains to be seen.

However, even without a CDO, organizations that want to remain competitive in the ever-growing data-driven economy require directors, executives, and managers committed to overcoming their big data challenges.

7. Big Data Handling Costs

The management of big data, right from the adoption stage, is expensive. For instance, if your company chooses an on-premises solution, it must be ready to spend money on new hardware, electricity, new hires such as developers and administrators, and so on.

Additionally, you will be required to meet the costs of developing, setting up, configuring, and maintaining new software even though the frameworks needed are open source.

On the other hand, organizations that settle for a cloud-based solution will spend on cloud services and on hiring new staff (developers and administrators), and will also meet the costs of developing, setting up, and maintaining the frameworks needed.

In both cases – cloud-based and on-premises big data solutions – organizations must leave room for future expansion to prevent the growth of big data from getting out of hand and, in turn, becoming too expensive.

The Solution:

Whatever will save your company money is dependent on your business goals and specific technological needs. For example, organizations that desire flexibility usually benefit from cloud-based big data solutions.

On the other hand, firms with extremely strict security requirements tend to prefer on-premises solutions.

Organizations may also opt for hybrid solutions where part of their data is kept and processed in the cloud, with the other part safely tucked away on-premises. This solution is also cost-effective to a certain extent, so we can’t write it off completely.

Data lakes and algorithm optimizations can help you save money if approached correctly. Data lakes come in handy when handling data that need not be analyzed at the moment. Optimized algorithms are the way to go if you are looking for a way to minimize computing power by up to 100 times or even more.

In a nutshell, the secret of keeping the cost of managing big data as minimal and reasonable as possible is by analyzing your company’s needs properly and settling on the right course of action.

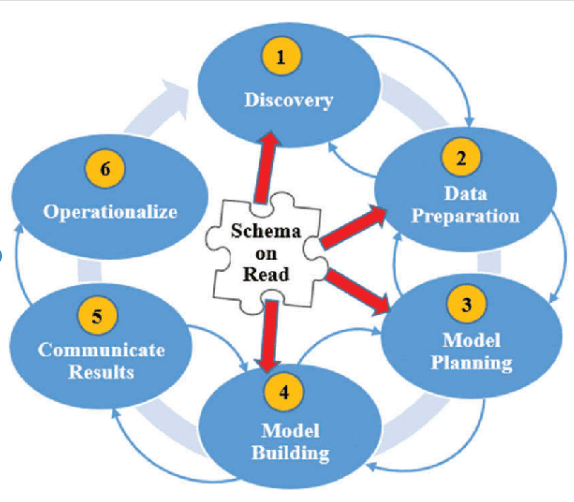

8. Data Integration

Image source: Researchgate

Big data integration revolves around integrating data from multiple business departments into one version of truth useful to every member of the organization.

However, the IT team finds it quite challenging to handle big data originating from many different software and hardware platforms and in all possible forms.

The presence of many unique data processing platforms makes it hard for organizations to simplify their IT infrastructure in their pursuit of easy data handling and big data process flows. The IT departments consider this challenge quite big.

The Solution:

Don’t attempt to go the manual way. It may sound easy and cheaper, but it will not be cost-effective in the long run.

Choose the best software automation tools that come with hundreds of pre-built APIs (for a wide data spectrum), files, and databases. While you may have to hand-develop some APIs on your own at times, you can rely on these tools to do the biggest portion of the work.

9. Upscaling Problems

One of the things that big data is known for is its dramatic growth. Unfortunately, this feature is known as one of the most serious big data challenges.

Your solution’s design may be well thought out and therefore flexible when it comes to upscaling, but the real problem doesn’t lie in the introduction of new processing and storage capacities.

The real problem is in the complexity of upscaling in a way that ensures that the performance of the system does not decline and that you don’t go overboard with the budget.

The Solution:

For starters, make sure that your big data solution’s architecture is sound, as this will save you a lot of problems. Secondly, remember to design your big data algorithms with future upscaling needs in mind.

Thirdly, have the necessary plans in place for the maintenance and support of your system to help you address all data growth changes accordingly. Fourthly, encourage systematic performance audits of your system to identify weak spots and address them in good time.

As you can see, there are solutions for the various big data challenges that organizations like yours face. While these challenges may vary from time to time, the key to ensuring the execution of proper solutions is keeping the company’s goals and technological needs in mind.

Big data is here to stay, and the sooner businesses pursue solutions to the associated challenges, the better.